Information Technology (IT) Pioneers

Retirees and former employees of Unisys, Lockheed Martin, and their heritage companies

Software Engineering, Chapter 48

1. Introduction

Dr. Grace Murray Hopper was renowned throughout the world as an early software developer, especially for the tools she created to make programming easier for those who followed her. Her AutoCoding paper pointed the way.

Sperry Univac Defense Systems and Unisys Defense Systems were developers

and users of MAPPER software beginning in the late 60's. Lockheed Martin

continued to use MAPPER software in their operations at the plant on

Pilot Knob Road in Eagan, Minnesota. Therefore it is appropriate to

include MAPPER history as part of our Legacy.

Some may recall the AS-1 assembler for the early 30-bit

computers. It was loaded into the Plant 1 Military Computer Center's

type 1206 from paper tape via the Friden Flexowriter until the 1240 magnetic

tape versions were developed. In 1962 programmers used the

SLEUTH assembly system to develop 1107 software.

Then we started working with the Compiler System (CS-1), which included 'Macro' functions for the 30-bit computers. CS-1 evolved to CMS-2 for compiling and program debugging. A summary of these Navy software generation tools is in section 7. [lab]

In late 1964 Jorgen Anderson was developing a FORTRAN compiler for

30-bit machines using the 1206 in the Plant 1 Military Computer Center.

I was taking a U of MN FORTRAN class at that time, compiling programs on the

CDC 1604 at the University of Minnesota. Jorgen used my 'Gassy' program

to help debug the 30-bit FORTRAN software. Jorgen figured that when

he got the same printout results via the 1206 computer as I'd gotten

from the CDC 1604 at the U of MN, then the software was working. The

1218 and 1219 computers were initially programmed using the TRIM assembler/compilers

in the early 60's. There was an 1824 computer AS-1 assembler too;

I recall that Ken Van Duren was the lead programmer developing that

assembler. [lab]

In 1975 White Oak Laboratory generated a comparative description of several high-level computer languages, including the Navy's CMS-2 package.

2. ATHENA Programming for a Ground Guidance System - by Bob Russell

For any of you who are not familiar with the Ground Guidance System

type of missile launch, it is exactly what the name implies. The launches

were guided into a trajectory or orbit by a ground based computer. The

United States Air Force contracted Univac to design and then to later

build such a computer, called the Athena. The original purpose was for

it to be used as the main guidance computer for the Titan defense system

which was to be installed in various parts of the continental United

States back in the 1950’s as our first line of defense.

To say the least, the Athena was a complex and cumbersome piece

of hardware to write software for. It consisted of a rotating drum on

which the software was stored. Input of the software was accomplished

by loading the software from a paper tape (Mylar). The drum consisted

of 4096 computer words of memory which were divided into four sectors.

The rotating drum had to be taken into consideration when operating

the sequence of instructions which were located on the drum. The next

instruction had to be at least one location ahead of the preceding one,

except for the multiply and divide instructions which took considerably

longer. Also, one had to consider the jump to another sector of the

drum to pick up the next instruction.
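The spacing constraint described above can be illustrated with a small sketch. It is not the original Athena tooling. The instruction names, the per-instruction timings (counted in drum word positions), and the assumption that the four sectors are equal in size and behave like a ring of word positions are all hypothetical, chosen only to show why each instruction had to sit ahead of the previous one and why multiply and divide needed a larger gap.

```python
from dataclasses import dataclass

DRUM_WORDS = 4096                      # from the text: 4096 words on the drum
SECTORS = 4                            # from the text: four sectors
SECTOR_WORDS = DRUM_WORDS // SECTORS   # assumption: sectors are equal in size


@dataclass
class Instruction:
    name: str        # hypothetical mnemonic, for illustration only
    word_times: int  # hypothetical: drum positions that pass while it executes


def space_program(program, ring=SECTOR_WORDS):
    """Place each instruction at the first free position the drum reaches
    after the previous instruction has finished executing."""
    if len(program) > ring:
        raise ValueError("program does not fit in one sector")
    used = set()
    layout = []
    ready = 0  # first position reachable by the next instruction
    for ins in program:
        addr = ready % ring
        while addr in used:            # skip positions that are already taken
            addr = (addr + 1) % ring
        used.add(addr)
        layout.append((addr, ins.name))
        ready = addr + max(1, ins.word_times)  # at least one location ahead
    return layout


def rotational_wait(addresses, program, ring=SECTOR_WORDS):
    """Total word positions spent waiting for the drum to come around."""
    total = 0
    ready = addresses[0]
    for addr, ins in zip(addresses, program):
        total += (addr - ready) % ring
        ready = addr + max(1, ins.word_times)
    return total


if __name__ == "__main__":
    program = [
        Instruction("LOAD", 1),
        Instruction("MUL", 5),   # multiply takes considerably longer
        Instruction("ADD", 1),
        Instruction("STORE", 1),
    ]
    spaced = space_program(program)
    print("spaced layout:", spaced)
    print("wait, spaced:", rotational_wait([a for a, _ in spaced], program))
    print("wait, consecutive:", rotational_wait([0, 1, 2, 3], program))
```

With these made-up timings, storing the four instructions in consecutive locations costs 1020 word positions of waiting, because the instruction after the multiply has already passed under the head. The spaced layout waits for none.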

Now, if any of you can understand what I’ve just told you,

you are to be commended. In order to prepare programs for the Athena,

everything had to be programmed on a general purpose computer called

the 1103 and later the 1103A. These were located in old Plant #2. Needless

to say, a large amount of software was required to support this effort.

A simulation of the Athena computer had to be developed to operate on

the 1103, along with simulated missile launch. Much additional software

was developed to assist the programmers in this effort.

During the initial phases of the Athena programming, the problems and

concerns of the drum were disregarded in favor of getting the equations

and logic resolved through what we call program debugging. After considerable

checkout and debugging, the Athena program was then subjected to what

we called “spacing” the program on the Athena drum for its

ultimate use in guiding a missile.

Place yourself in the shoes of a programmer in those days. Our

input was paper tape, our output had to be typed on a flex-o-writer which

produced hard copy to assist the programmer in his software development.

And then one day a miracle happened: the 1103 computer center obtained

a “high” speed printer. Now, don’t get the idea this

was like today’s high speed printers. This thing sounded like

a washing machine and printed about one line a second. But, boy, did

this have it over the old flex-o-writer which took many minutes to produce

hard copies.

Another one of our duties was to produce what we called a “simulation

tape” which was a Mylar tape containing all the commands and data

from a simulated missile launch. This tape was produced on the 1103

and when wound up measured approximately 6-8 inches in diameter. Now,

I don’t know if any of you have ever tried to pick up a roll of

Mylar tape of this size, but it is a challenge. It is slippery and it

is heavy. If you are not careful, the center of the roll will want to

obey gravity, and will commence to spiral downward like a tornado. Woe

be to the one who had to rewind this mess.

The simulation tapes were transported to places such as Cape Canaveral. There the tape would be placed on the Athena and run like an actual launch, and all the data on the simulation tape was then compared to that generated by the Athena to assure accuracy. Do you understand all this?
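The comparison step can be sketched roughly as follows. The text says only that the data on the simulation tape was compared with the data the Athena generated; the record layout, the field names, and the numeric tolerance here are hypothetical and are not taken from the original procedure.

```python
from typing import NamedTuple


class Sample(NamedTuple):
    time_s: float   # hypothetical time tag of the record
    command: str    # hypothetical guidance command name
    value: float    # hypothetical commanded value


def compare_runs(expected, actual, tolerance=1e-6):
    """Return a list of human-readable mismatches between the simulated
    launch (expected) and the data produced by the machine under test."""
    mismatches = []
    if len(expected) != len(actual):
        mismatches.append(
            f"record count differs: expected {len(expected)}, got {len(actual)}"
        )
    for i, (e, a) in enumerate(zip(expected, actual)):
        if e.time_s != a.time_s or e.command != a.command:
            mismatches.append(f"record {i}: expected {e}, got {a}")
        elif abs(e.value - a.value) > tolerance:
            mismatches.append(
                f"record {i}: {e.command} value {a.value} differs from {e.value}"
            )
    return mismatches


if __name__ == "__main__":
    tape = [Sample(0.0, "PITCH", 1.5), Sample(1.0, "YAW", -0.25)]
    athena = [Sample(0.0, "PITCH", 1.5), Sample(1.0, "YAW", -0.30)]
    for line in compare_runs(tape, athena):
        print(line)
```

An empty result means the two runs agree within the chosen tolerance. Any entry points to the record where the two first diverged.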

Many of the events I had previously reported occurred during

much of this development. Computer time on the old 1103 was shared by

several projects, thus demanding 24 hour computer time assignments.

Many times we would draw late-night blocks of computer time. Much

of this time was utilized for debugging of various support programs.

Often, when program corrections needed to be established, it meant getting

back in during the next day to prepare for the next night’s run. Do any of you remember when they had the check-ins at the guard desk for being late after 8:00 AM? How many of us wanted to tell these guys where they could put it?

Lots of hours, but lots of fun. A lot of stress, when you think

back about it. It seemed like a lot of this stress was relieved by the

parties we had, picnics, and our company sponsored bowling. I’m

sure that many of you remember the travel to the “Cape”,

Vandenberg AFB, Bell Labs and many others. Such were the early days

of programming.

3.0 MAPPER Articles

3.1 MAPPER Development

A Mission Critical Legacy by Gerry Del Fiacco - Unisys Corporation

Software Engineering Manager

It is said colloquially that necessity is the mother of invention.

In 1968, the Unisys software product known as MAPPER was conceived and

born to serve a need that persists and thrives to the present day.

Before describing that need, it is appropriate to appreciate the context

in which this software product emerged thirty-five years ago. In that

era, the essence of the computer systems business was the engineering,

building, shipping, installing and supporting of computer hardware.

Vendor-provided software was viewed as a necessary burden to sustain

that business. Business consulting, services, training and the like

were not much more than an afterthought. And, the hard-core engineering

community that drove the business of the computer industry in that age

had little tolerance or use for euphemisms such as information technology,

enterprise assets and business transformation.

My own experience with these phenomena began when I entered the computer

industry in 1965 after matriculating in the comfortable environs of

graduate school at the University of Minnesota. My first job in industry

was with Control Data Corporation working on a project headed by the

legendary Seymour Cray. He had a team of hardware engineers sequestered

in an engineering lab on his own property in Chippewa Falls, Wisconsin.

At that lab, he was fashioning what was at that time the largest computer

system ever envisioned thus far in the early years of the computer industry.

That engineering lab was located far away from the influence

of corporate headquarters in Bloomington, Minnesota. There was exactly

one telephone in the Chippewa Falls facility. It was a red telephone

mounted on the wall of the entryway of the lab, and it was to be used

only for emergency purposes. My software colleagues and I were more

or less viewed as intruders. We soon discovered that Seymour and his

engineers had written a basic operating system for his large-scale computer

system entirely in octal, that is, numeric machine language. Our first

job was to disassemble the octal code for that operating system into

symbolic assembler code. The resulting operating system became known

as the Chippewa operating system. Seymour also had a disaffection for

other forms of software. It took months of high-level argument within

Control Data Corporation to convince Seymour that a FORTRAN compiler

would be needed to make it feasible for the corporation to market his

new computer system. That system was intended to be sold as a scientific

number crunching machine. One would think that a FORTRAN compiler would

have been an obvious requirement. But, such was the undistinguished

fate of software in that era.

My awareness of Sperry Univac computer technology began in 1968

at the Jet Propulsion Laboratory (JPL) of the California Institute of

Technology as a customer of Sperry Univac computer systems. At JPL,

we helped NASA put spacecraft and astronauts on the surface of the moon

and we returned them safely to earth with the aid of IBM and Sperry

Univac computers using what now would be viewed as primitive software.

During a launch and mission, we would gather in the mission control

center at all hours of the day and night and we would pray that a bug

in our software or a breakdown in our hardware would not cause the loss

of life or the failure of the mission. Hence was born the term mission-critical.

Meanwhile, a mission-critical need of another nature existed

in 1968 at the engineering center and factory where Sperry Univac was

building its computer systems in Roseville, Minnesota. A complex of

four buildings spread over several acres of land and populated by thousands

of employees on three work shifts was required to house activities that

encompassed engineering, procurement, storage of parts, assembly, diagnostics,

testing, certification, packaging, order entry, inventory management,

shipping and receiving, accounts payable and receivable, customer support,

etc. Hardware technology was characterized by discrete components, an

infinite amount of wire, the lack of any subassemblies from outside

vendors, and the construction of massive hardware units. The primary

mass storage device was a flying head drum that resembled a coffin in

size and weight. Forklifts, oscilloscopes and slide rules were popular

tools. PCs were unknown even in concept. Pencil-and-paper means

of planning and record keeping were routinely used. Filing cabinets

were precious resources. As the business grew and prospered, the rudimentary

methods of running the business that were being used became unmanageable.

In fact, this became the limiting factor on what could be accomplished

in terms of business volume, revenue, net income and customer satisfaction.

It wasn’t printed circuit layout, or power supply design, or robots

or any other aspect of sophisticated engineering or manufacturing technology

that was the major stumbling block in business growth and success. It

was the lack of an electronic means for planning, tracking and execution

of the technical, administrative and operational regimen for running

the factory.

From this milieu emerged the need for the software tooling that became

known as MAPPER. Its first important characteristic was that it was

conceived, designed, built and deployed by the end users who needed

it. And, it was constructed in a way that it did not require an application

software development organization to reside between the end users and

the problems those end users were trying to solve in their workplace.

This resulted in a rapid application development personality for MAPPER

that meets the needs of end users and that persists to this day as a

defining characteristic of the product.

Concurrently, there was a nice operating system taking shape

on the Series 1100 computer systems that Sperry Univac was building

in its factory in Roseville, Minnesota. Its name was EXEC8. I’m

proud to say that I was one of many members of the extended software

development team that created and nurtured the operating system known

today as OS2200. But, a demanding and varied workload of batch jobs

and online time-sharing terminals could bring EXEC8 crashing down easily

in its early years. Even well into the 1970’s, we had a saying

that the mean-time-between-failure (MTBF) of the operating system was

measured in minutes and that we would consider it an accomplishment

when that MTBF could be measured in some integral number of hours.

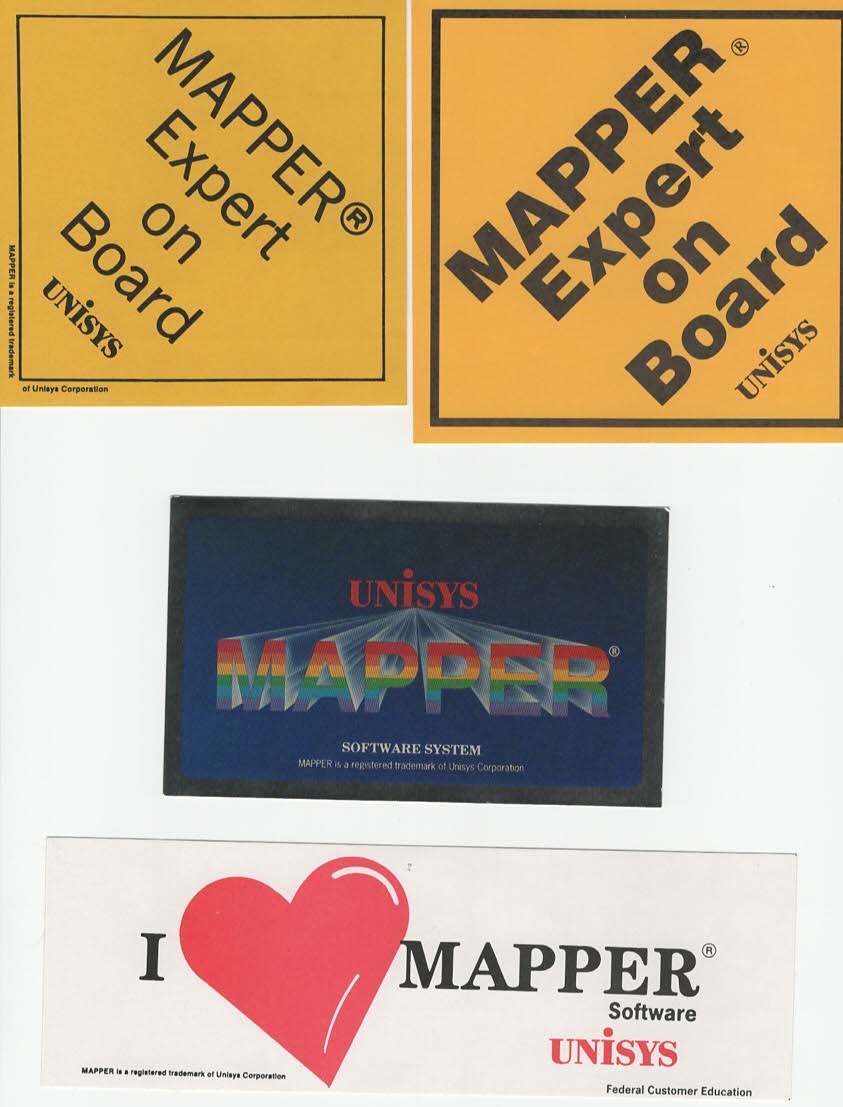

{Editor's note: Mapper stickers from Ron Shurson, Unisys

retiree.}

Accordingly, the team of factory software developers that conceived

of MAPPER committed to building it in somewhat of a self-contained and

self-reliant software environment that depended to a lesser extent on

the features and capabilities of EXEC8. This was done to ensure the

viability, reliability, responsiveness, data integrity, and trustworthiness

of the software environment with which the factory would be run. This

was a mission-critical venture. Ultimately, a multi-billion dollar business

enterprise and the demands and expectations of the Series 1100/2200

customer base came to depend on MAPPER exhibiting these industrial strength

attributes.

This approach required Series 1100/2200 MAPPER to incorporate

a rather comprehensive range of functionality, including many of the

batch processing, online processing, networking, security, printing,

database management, recovery, administration and operations capabilities

normally considered to be within the domain of the operating system.

Some argued against doing this as being too costly or ambitious. Others

said the cost of failure outweighed the cost of developing MAPPER in

this manner. The latter view prevailed. They soon were proved to be

right.

A few years after MAPPER was deployed internally, a Sperry Univac

customer observed MAPPER in operation during a tour of the Roseville

engineering and manufacturing plant. The customer demanded that MAPPER

be available on the Series 1100 system they were intending to purchase.

The rest, as they say, was history. By the late 1970’s, MAPPER

was a driving force in the sale of Series 1100/60/70 systems. Even today,

OS2200 MAPPER is deployed usefully at half of the 2200/ClearPath/IX

accounts in the worldwide Unisys customer base.

The self-reliant nature of the design of Series 1100/2200 MAPPER

afforded its developers great latitude in innovation because their advances

did not have to be negotiated and coordinated with disparate software

architecture, design and development organizations outside the MAPPER

domain. Because of this latitude, MAPPER pioneered many advanced concepts.

MAPPER invented client/server programming before the industry defined

it. MAPPER was one of the first software products from Unisys to achieve

X/Open certification. MAPPER stored, rendered and displayed digital

images on graphical displays before Bill Gates invented Windows. MAPPER

rendered data in dynamic, computable spreadsheet form before Lotus and

EXCEL existed. MAPPER was Heterogeneous-Multi-Processing (HMP) enabled

years before Unisys coined the term and baptized its ClearPath platforms

with this name.

Building upon this strong history, much modernization has been

done in recent years, most visibly on the surface in terms of renaming

MAPPER as the Unisys Business Information Server (BIS) and of adapting

to a Microsoft Windows conforming environment for BIS administrators

and end users and for programmed implementation of BIS runs on Windows

workstations.

3.2 The MAPPER System Story from Lou Schlueter

MAPPER software has a unique and unexcelled history.

Its user-driven Report Processing concepts were originally defined over

30 years ago. Report Processing allows users to analyze report data

using the extensive, interactive MAPPER Information Power Tool set.

This processing is far more productive than simple document processing.

MAPPER software has been annually enhanced functionally to its current

level of over 110 user-executable Report Processing functions with over

700 options and a powerful application development environment. No other

software offers such a powerful set of capabilities integrated with a

real-time, report structured database with full networking and internet

capabilities.

MAPPER software has been upgraded to run on state-of-the-art

Unisys mainframe systems, Microsoft Windows and Windows NT systems as

well as UNIX, SUN, ATT, IBM RISC and OS/2 systems. Interfaces are available

to industry relational database systems such as ORACLE and INFORMIX.

MAPPER applications have also been Internet Web enabled with its Cool

Ice offering.

MAPPER software was the key factor in creating an installed base

worth over $2 billion for Sperry/Unisys corporations. The history of

this software and the philosophy that enabled its unprecedented success

is documented in the "VIRTUAL REPORT PROCESSING, The MAPPER Story".

REAL-TIME REPORT PROCESSING & USER-DESIGNED COMPUTING

The concepts of Real-Time Report Processing and User-Designed

Computing that MAPPER systems have provided still represent a new way

of using computers with essentially unlimited potential. These concepts

clearly have enormous end-user appeal as illustrated by the Sperry/Unisys

internal and customer experiences.

The ability to share real time reporting data and to be able to interactively

turn this data into control information using the programmer-less Information

Power Tools has a universal appeal. No comparable, user executable functionality

is offered by any other industry software system.

The enormous success of MAPPER systems was achieved with minimal

promotion by Sperry and nonexistent promotion by Unisys. It still generates

over $20,000,000 in software revenue primarily from the 1100 mainframe

customer base. Under pressure from the remaining customer base, MAPPER

software is still supported and is being functionally enhanced. Most

of the expansion of the existing MAPPER Systems market was created through

word-of-mouth promotion. The users said, "There are two kinds of people

in the world, those that love MAPPER and those that don’t know

enough about it."

3.3 MAPPER User's Group

Dear current and former MAPPER-ites:

At the UNITE 2008 conference last week in Orlando, FL, we had a "MAPPER Museum" on the exhibition floor to celebrate the 40th anniversary of its conception - a chance to get out the old MAPPER artifacts that I've been saving for so long and put them on display. It was fun for all us old-timers who were fortunate to be there, to see that stuff again, and recall stories of the long and twisted history of MAPPER.

Hope you enjoy this trip down memory lane! John Thalhuber

3.4 MAPPER Code Cards, etc.

- Sperry Basic MAPPER Functions

- Sperry MAPPER Run Function Formats

- Sperry MAPPER Summary of Function Options

- Sperry Univac Basic MAPPER Functions

- Sperry Univac COBOL Program Development Subsystem - Series 70 systems

- Sperry Univac COSMOS Budget Information

- Sperry Univac MAPPER Run Function Formats

- Sperry Univac MAPPER Summary of Functions

4. NTDS

Early Memories of an Old Programmer by John M. Byrne 25 May 2006

After graduating from a major Jesuit university in the Midwest

in 1964, with a degree in math and physics, I took a job with the Air

Force’s navigational chart making organization. There I was first

introduced to using “computers.” This was mostly just the

manipulation of punch cards for record keeping and some coordinate transformations.

This appealed to me and in 1966 I applied for and was accepted as a “computer

programmer” at Sperry Univac. (Note that I did not have a computer

science degree and, in fact, my alma mater had no computer science department.

We had however heard that a math teacher was fooling around with a “computing

device” in his spare time.) Nevertheless, not even knowing what

a binary, octal, or hexadecimal number was (I’m embarrassed to

say), my programming career was launched.

As we were under the gun (defense department pun), within six

months the new hires (and there were many) and I were turning out programs

for the Univac 1206. The 1206 was either the first or one of the first

shipboard ruggedized computers to be deployed in the U.S. Navy’s

fleet. We were producing prototype software for the DLG-16 destroyer,

which was to be later turned over to FCDSSAlant for operational deployment.

These command and control programs were to provide a very specific service

to the fleet – that is to take in sensor data, refine it and display

it to an operator, and bring weapons to bear on hostile contacts.

These were some pretty basic computers. We did have a high-level

language to program in (CS-1), but because the computers were so

slow (6 mils to just load a register) and we were required to process

radar data and weapon assignments in real-time, more often than not

we were required to drop to machine language to get the speed. Assembly

level language can be tricky and error prone. Corrections made on-line

needed to be keyed in, in binary, on the machine. On-line corrections

were made because program recompilation was a tedious and time-consuming

business. Not the method of today’s modern computers.

Check out of the software (debugging) was a problem. Actual shipboard

radars and weapons were not just lying around waiting to be used. Equipment

labs at Mare Island Naval Base were set up for this purpose. However, scheduling

time to use actual radars and launchers was at a premium at the base.

Getting controlled aircraft flights within the radar’s coverage

was even tougher. To help with this problem, a technique was used which

is commonplace today, but new in the early 60s – simulation.

Computers were programmed to respond as would the actual radar, missile,

gun, …etc. This simulation allowed the prototype operational programs

to be checked out in a lab environment at a fraction of the cost of

using live equipment. Later, these simulations were provided to the

Navy labs for maintaining the software after deployment. Not bad for

a bunch of kids who didn’t know what a computer was a few years

earlier.

When actual equipment had to be used, time was scheduled at Mare

Island. As schedules were tight, the lab was scheduled 24-7. Typical

rotation was three weeks at Mare Island; three weeks back at home to

get ready for the next trip. It was tough on the employees and their

families but I remember few complaints. It had to be done, so it was.

Sperry was turning out the most reliable equipment in the industry.

Being the best didn’t mean there were no problems. These were

some of the first computers ever built. There were problems. Hardware

field engineers were always at the ready, but finger pointing was always

an issue between the software types (programmers) and the hardware types

(field engineers.) Introduce all of the other systems aboard the ship

that interfaced with these early computers and you start to get a picture

of how severe the finger pointing could get. This extended to other

companies who were providing equipment, including the radar, launcher,

missile, gun, and displays. The U.S. Navy (NAVSEA, NAVORD) oversaw all

of this. Meetings in Washington, DC, were frequent and heated.

To say the earlier days of the first computers being brought

into use by the U.S. Navy were easy because the computers were so simple

would indicate that one didn’t understand the problem. Reality

is that in spite of all of the problems and complexities everyone knew

that he had to get the job done or answer to a lot of U.S. Navy commanders,

captains, and admirals. The bottom line was that no one wanted to be

the person, group, or company that held up deployment of a U.S. Navy

ship or aircraft. Remember that this was at the height of the cold war.

The departments at Sperry Univac all took this to heart. More often

than not, it was not corporate management making these trips to DC,

but the engineers. Engineers were writing the specifications, proposing

the job, performing the job, and making and supporting the deliveries.

Engineers and naval officers were definitely on a first name basis.

Seeing the spin that is put on today’s problems between government

organizations and the public (e.g. FEMA and Katrina, classified information

leaks, oil for food abuses, etc.) I can’t help but believe that

our relationship with the U.S. Navy in the 60s and 70s was not too bad.

Actually it was damn good although at the time it didn’t seem

like it was. The Navy was feeling its way through the difficulties of

implementing this new technology also. Add to that the rate at which

these emerging technologies were changing and I’d say we (Sperry,

the Navy, competitors, sub-contractors,...everyone) did some pretty

good work back then.

I am proud to have been a part of the early days when computers

were first put on Navy ships. I’m sure all of the guys I worked

with back then have similar stories to tell and are just as proud of

their contributions as they should be.

5.0 Computer Aided Design

This topic has been moved to the Engineering Chapter, section 6.0 on 3/30/2022 [LABenson]

6.0 Simulation Software Development by Michael Pluimer

I worked for Sperry 1969-79, 1982-88 and Unisys 1989-92 as a Senior Management Consultant.

I was the Design Methodology Manager on the Micro-1100 Project which

was perhaps the most radical and innovative of Sperry's mainframe programs.

It was a large risk and leap into the unknown. The program was moved

to an offsite office building in Eagan to isolate it from everyday

company operations. Many of the people mentioned in the article were

recruited to work on that project as circuit designers, including Mr.

Breid.

There were a lot of people involved in developing these tools and methodologies,

including myself. The entire project is also documented on a Sperry

produced video celebrating the product that was named Swift. It culminates

with a recognition of ENIAC's 50th anniversary. J. Eckert gave a speech

and commented on what the Micro-1100 project meant to him.

After the Unisys merger, the software, tools and methodology were transferred to a group in Philadelphia where several more mainframe chip sets were developed.

It just seemed a bit trite to attach the tools and innovation to a single person. More than likely, just a simple misunderstanding. What Mr. Breid did was quite an accomplishment for the program listed - but make no mistake, the bulk of the Hardware Design Language (HDL) innovations had occurred much earlier.

I think we have to be careful with CAD, tools, software and methodology.

The Ulysses HDL wasn't the first HDL in Sperry, not by a long shot. There were HDLs around when I joined the company in the late 60s. Compound that by the fact that Sperry was diverse - each division had a CAD department which had success with this project or that project.

When I was hired to help start the Micro-1100 project in 1982, one of the first tasks was to assess tools and methodology across the corporation. There were mature systems and HDLs in Defense, Blue Bell, Salt Lake City, Roseville, etc. As you may know, it is quite difficult to make such assessments in the face of local bias and political or personal considerations. We concluded that none of the "in-house" approaches would be adequate to meet the scope and scale of the Micro-1100. There were arguments, some very loud, and perhaps to this day they may continue.

We faced a similar task after the Burroughs buyout of Sperry to form Unisys. Senior management wanted "tool merger" - decide on the "best" tools to eliminate territorial CAD systems. This was even more difficult - methodologies, HDLs, languages, etc. were as diverse as the design teams that used them.

Over the years, I have made an analogy of CAD discussions to the old beer commercials where people would endlessly argue "tastes great!!!" vs. "less filling!!!" I have a personal philosophy about such things, but that isn't important here.

What is important is the following:

- Ulysses was developed for the Micro-1100 Project. It was unique by many standards and provided several "new" capabilities not found in other HDLs at the time. Its scope was broad and deep.

- Ulysses proved to have advantages in developing large scale systems, particularly in the capability to run system verification software and multi-processor systems.

- It wasn't the first HDL in Sperry - it was merely "one along the way" for a particular task - VLSI / 1100 design.

- It was further deployed (and developed) in Blue Bell for several more projects.

- Duane Breid developed/wrote a personal version of Ulysses and subsequently used this version in a 16 bit processor design within Defense Systems. This too was successful.

Historically then,

- Sperry had in-house HDLs and HDL methodologies in most every division, since the late 60s

- Ulysses was developed for VLSI projects such as "1100 on a chip" designs

- Ulysses extended the scope and scale of HDLs

- Ulysses and its methodology were developed by Dr. Hendry, M. Bulgerin and others in Micro-1100

- Mr. Breid developed his own version of Ulysses for Defense Systems chip designs.

7.0 Navy Compiler history by Clyde Allen

In September 1957, I joined Remington Rand Univac and was assigned to the NTDS department under Seymour Cray. Univac was under contract to build a transistorized computer for the U.S. Navy.

As the USQ-17 was being invented using preliminary prototypes named Magstec and Transtec, it became obvious that programming these computers was to become a major challenge. A group was put together in Dr. George Chapin’s NTDS department and named Advanced Programming. It was headed by Mark Koschman and included persons like Larry Krak, Phyllis O’Toole, Dick VanderSteeg, Bob Thurston, and Walt Haberstroh, among others. The task was to automate the programming effort. To that point, computer code was hand entered in binary directly into the hardware registers.

One of the first labor saving efforts was to develop a program that interpreted punched paper tape prepared on a Flexowriter and loaded the computer. A great time saver, except if one made an error in preparing the tape, there was no correcting it—one started over.

Through work with the people in the Navy’s NTDS department, such as Joe Stoutenburg, Eric Svenson, Capt. Ed Svendson, Capt. Parker Folsom, Don Ream, Paul Hoskins, and many others, the first automated assembler of mnemonic code and virtual addressing was developed. This was the beginning, and it was named Assembly System-1 (AS-1). From there Compiling System 1 (CS-1) was specified and became the Navy’s programming language supporting its proprietary computer architectures. There were many iterations of this system through the years as features were added to make the compiling process more efficient and to account for technology changes in the hardware. Later versions carried names such as CS-2, CMS-1, and CMS-2, and they supported computers such as the USQ-17, USQ-20, UYK-7, and UYK-20.

Adoption of CS-1 as the Navy’s standard language was not without controversy. Dr. Grace Hopper, also a Univac employee and a Naval Reserve officer, was inventing and promoting COBOL as the answer to the world’s programming problems. This language quickly proved to be best suited to business applications and was not considered for real-time applications. An international community was also adopting an algebraic language (ALGOL) for scientific applications. The Naval Electronics Laboratory in San Diego, under Dr. Maury Halstead, promoted a dialect of ALGOL called the Naval Electronics Laboratory International ALGOL Compiler (NELIAC). NEL experts and Univac experts staged many heated debates to help the Navy decide which was better: NELIAC or CS-1. These discussions were political as well as technical, in that both developers and their sponsors in the Navy had an investment in the decision. CS-1 was declared the Navy’s standard and enjoyed remarkable stability and usage while the proprietary computer architecture was employed.

At the same time as the Navy was surging ahead with its programming automation, the Air Force was also moving out. At the System Development Corporation’s laboratory, a brilliant young engineer by the name of Jules Schwartz was adapting ALGOL into a standard for the Air Force. This language was named JOVIAL (Jules’ Own Version of the International Algebraic Language). While some discussion was held in the Pentagon as to why two languages were needed, no action was taken to change the rush to program operational systems as fast as possible.

Univac and its successor companies can be extremely proud of the computer systems and software developed and employed in the Navy. From those primitive beginnings in the late fifties through today, these systems have served the USA well by providing the tools necessary to protect our country’s shores.

The Bit Savers web site has preserved some documentation of these support software tools; Keith Myhre has scanned some reference cards too:


- CMS-2Y Programmers Reference Manual for the UYK-7 and UYK-43 computers

- CS-1 Compiling system Operating Instructions

- CS-1 Operations & Input - Mono & Poly Operations

- CMS-2 Language Reference Booklet

- CMS-2M Language Reference Booklet

- Common Program Debug Module Functions (32-bit)

- NTDS Compiling System (CS-1) Repertoire of Mono-Codes

8. Early 1103 software

From: George Gray To: Smith, Ronald Q Subject: Early Software for the 1103

MIT's (the world's?) first Operating System (1954) -

John Frankovich and Frank Helwig wrote, for Digital Flight Test Instrumentation project (DFTI) and general Whirlwind use, the Director Tape program, to use the Control-room Flexowriter's mechanical tape-reader in parallel operation with the new fast photoelectric tape reader (PETR, installed Fall 1952) to read DFTI's huge, spliced-together paper tapes, with emulated manual switch-settings, post-mortem tests, etc. interspersed and automatically controlling the computer -- operator-free.

John Ward, Project Engineer, and Doug Ross, Lead Programmer, used digital instrumentation hardware and Whirlwind computing to evaluate the effectiveness of the tail turret for the B-58 Hustler supersonic bomber, but mounted on B-47s with F-86 fighter nose strobe target -- on serial #3 ERA 1103 36-bit word computer at Eglin Air Force Base, Florida. $10,000/hour flight-test costs! Frankovich and Helwig also wrote a symbolic assembler, based on Digital Computer Lab's Summer Session software, generating 1103 tapes, which then were flown to Florida for debugging and use. Ross wrote 'Save the Baby' interruptive checker and patch assembler on the 1103, for quick fixes on site. ERA had no software; Flexowriter and plug-coded relay box punched 7-bit machine-code tape for input. Servo Lab's shop built elegantly simple manual winders and roller-lined, high-sided, deep-pocket tape holders for use with 200-cps photoelectric tape readers, both places.

[Contrary to Computer Museum mockup of Whirlwind's Control Room, the on-line Flexowriter keyboard never was used for input! Only the 10-cps printer and tape-reader and tape-punch were live -- as was Operator Joe Thompson, manikin-rendered in the exhibit.]

References: "A Personal View of the Personal Work Station: Some Firsts in the Fifties," invited paper for the ACM History of Personal Workstations Conference, January 1986. In A History of Personal Workstations, A. Goldberg, Ed., New York: ACM Press/Addison-Wesley, 1988, pp. 51-114.

Reported By: Doug Ross

In this Chapter

- Introduction

- ATHENA by Bob Russell

- MAPPER Stories:

- MAPPER Development by Gerry Del Fiacco

- MAPPER Summary from Lou Schlueter

- MAPPER Users Group from John Thalhuber

- MAPPER Code Cards

- NTDS by John M. Byrne

- Computer Aided Design

- Simulation Development by Michael Pluimer

- Navy Compilers by Clyde Allen

- Early 1103 software by Doug Ross via George Gray

Chapter 48 edited 7/13/2025.